Abstract

Recent text-to-image generative models have enabled us to transform our words into vibrant, captivating imagery. The surge of personalization techniques that followed has also allowed us to imagine unique concepts in new scenes. However, an intriguing question remains: How can we generate a new, imaginary concept that has never been seen before? In this paper, we present the task of creative text-to-image generation, where we seek to generate new members of a broad category (e.g., generating a pet that differs from all existing pets). We leverage the under-studied Diffusion Prior models and show that the creative generation problem can be formulated as an optimization process over the output space of the diffusion prior, resulting in a set of "prior constraints". To keep our generated concept from converging to existing members, we incorporate a question-answering Vision-Language Model (VLM) that adaptively adds new constraints to the optimization problem, encouraging the model to discover increasingly unique creations. Finally, we show that our prior constraints can also serve as a strong mixing mechanism, allowing us to create hybrids between generated concepts and introducing even more flexibility into the creative process.

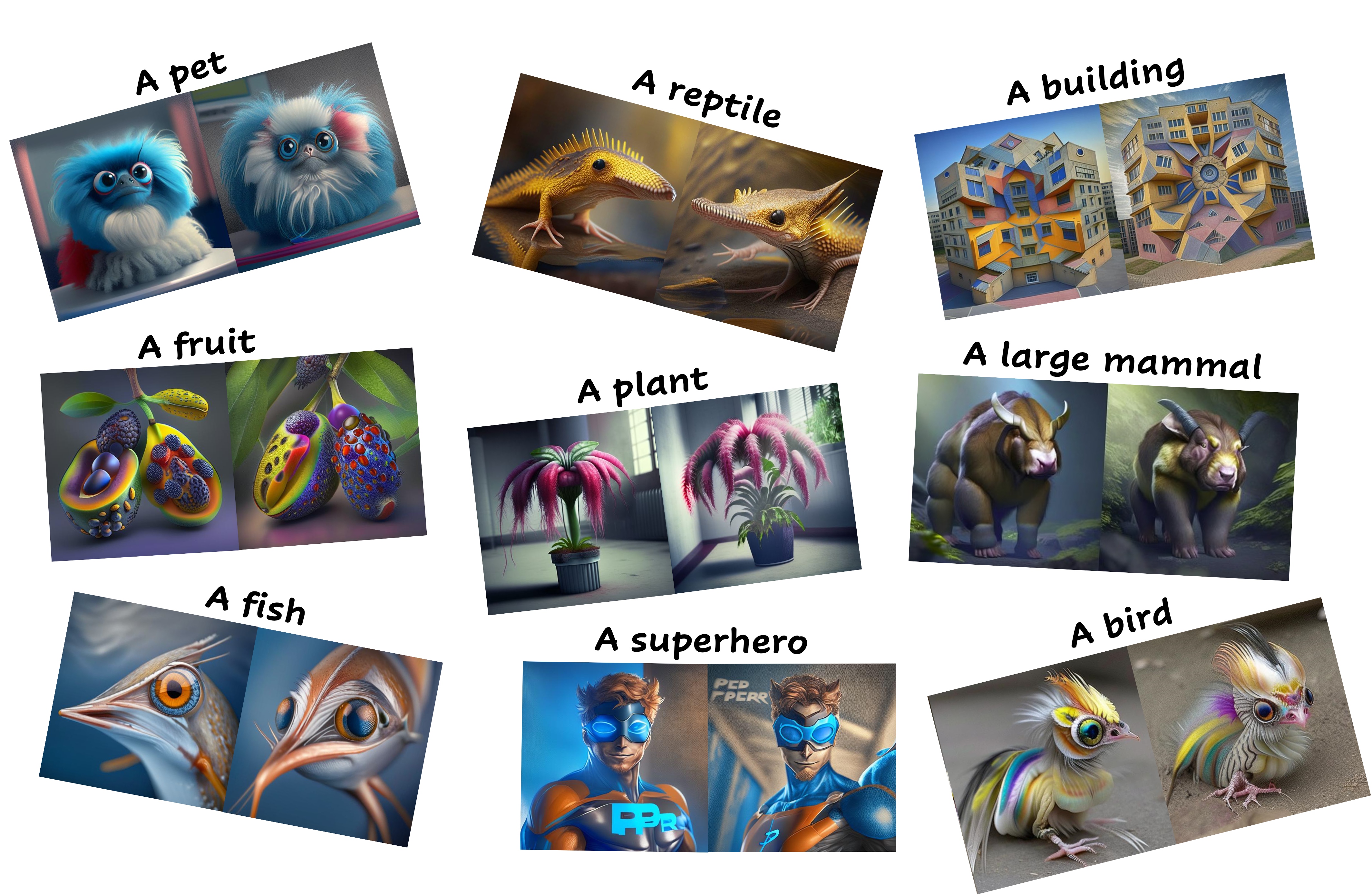

Examples of Concepts Generated with ConceptLab

A New Pet

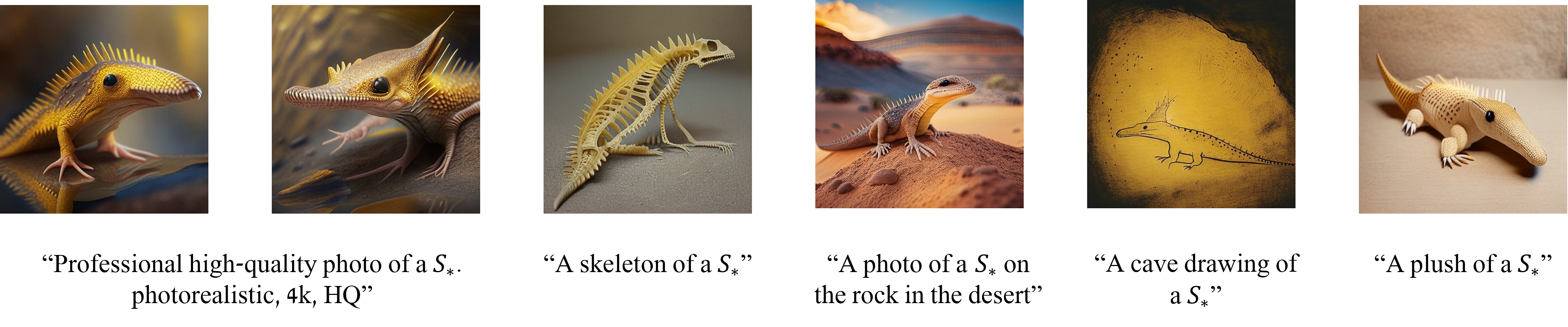

A New Reptile

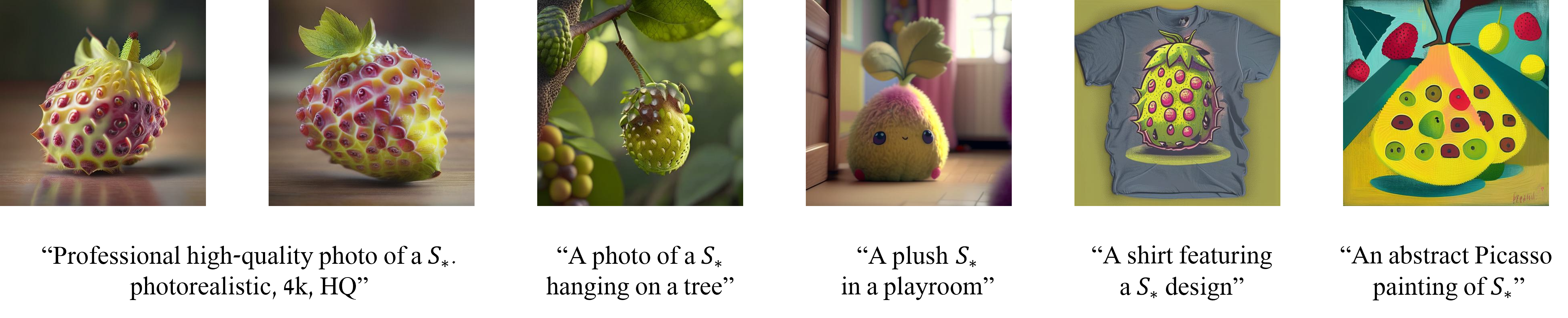

A New Fruit

A New Superhero

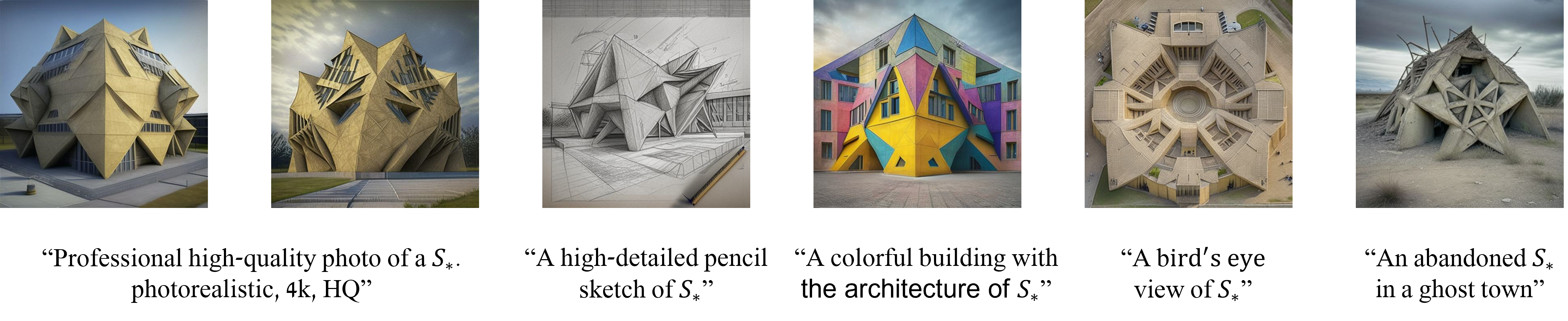

A New Building

A New Mammal

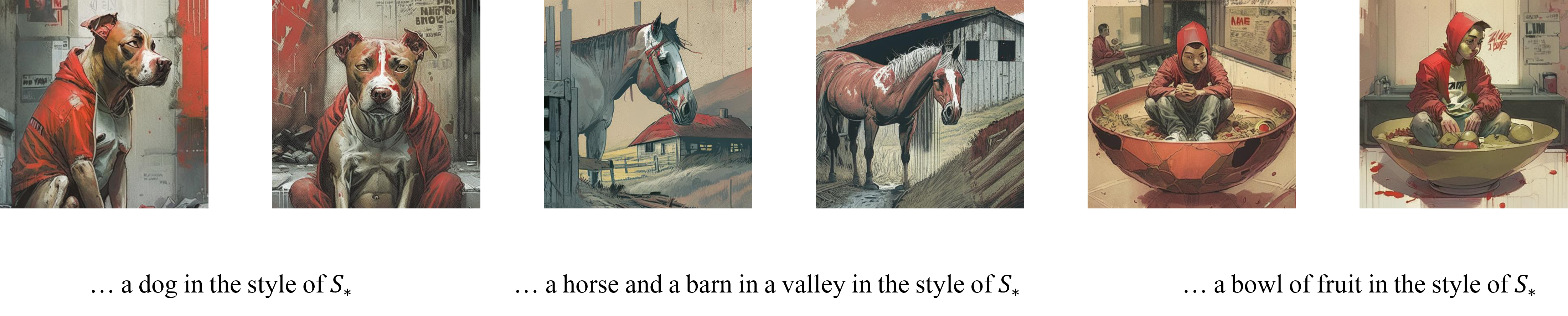

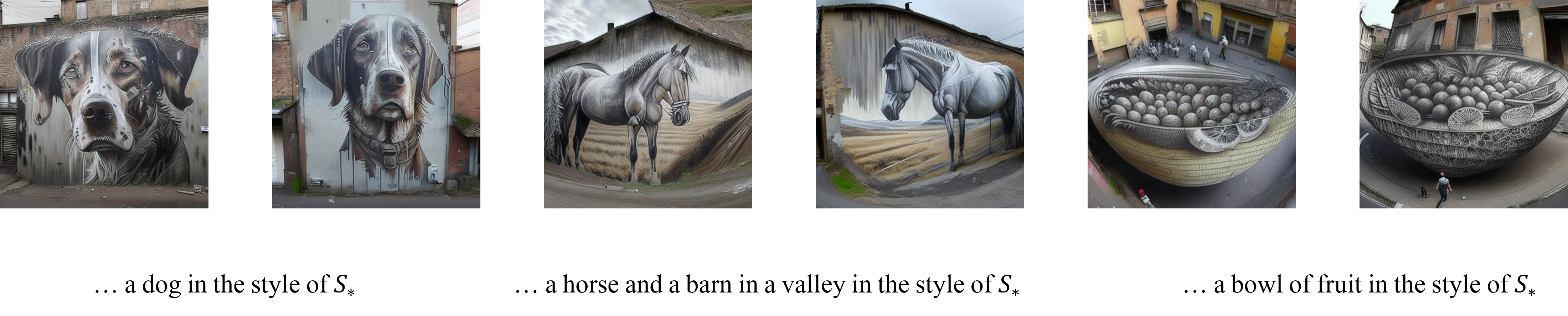

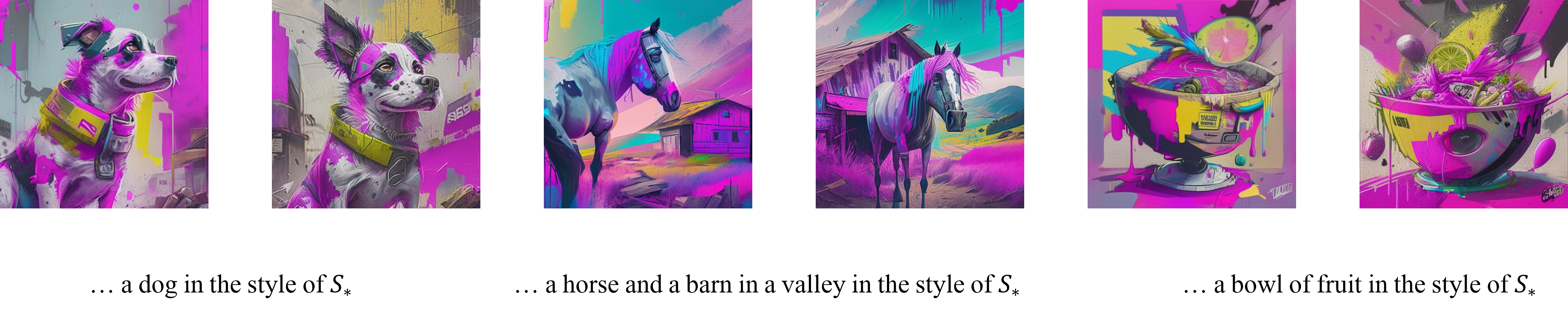

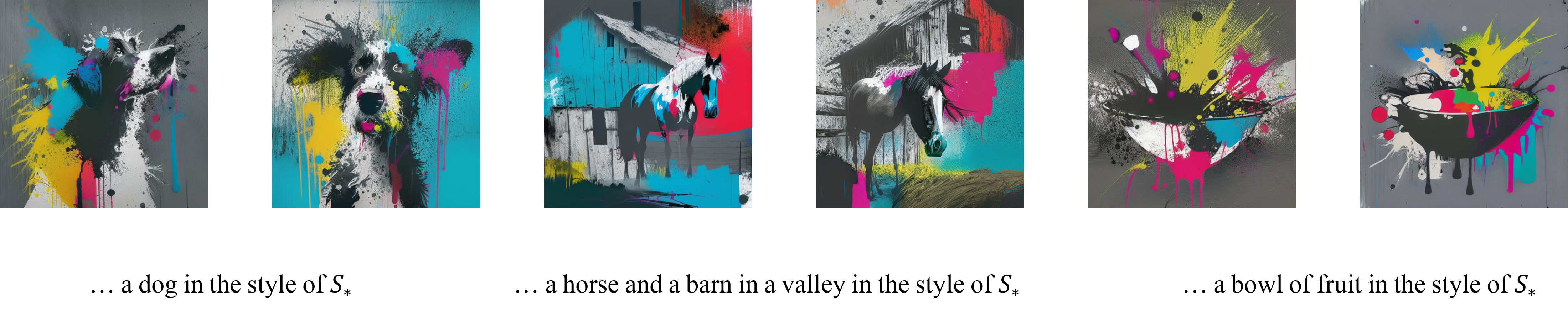

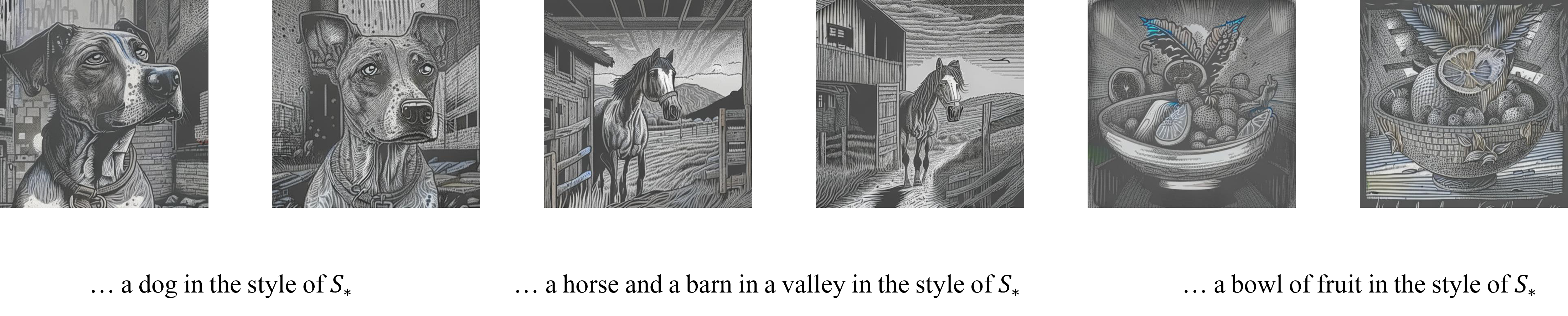

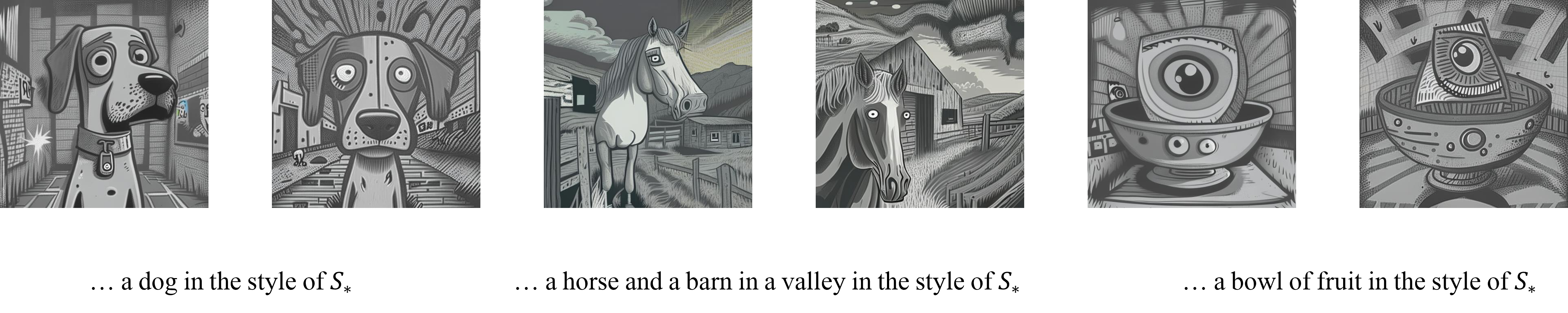

Examples of Art Styles Generated with ConceptLab

How Does it Work?

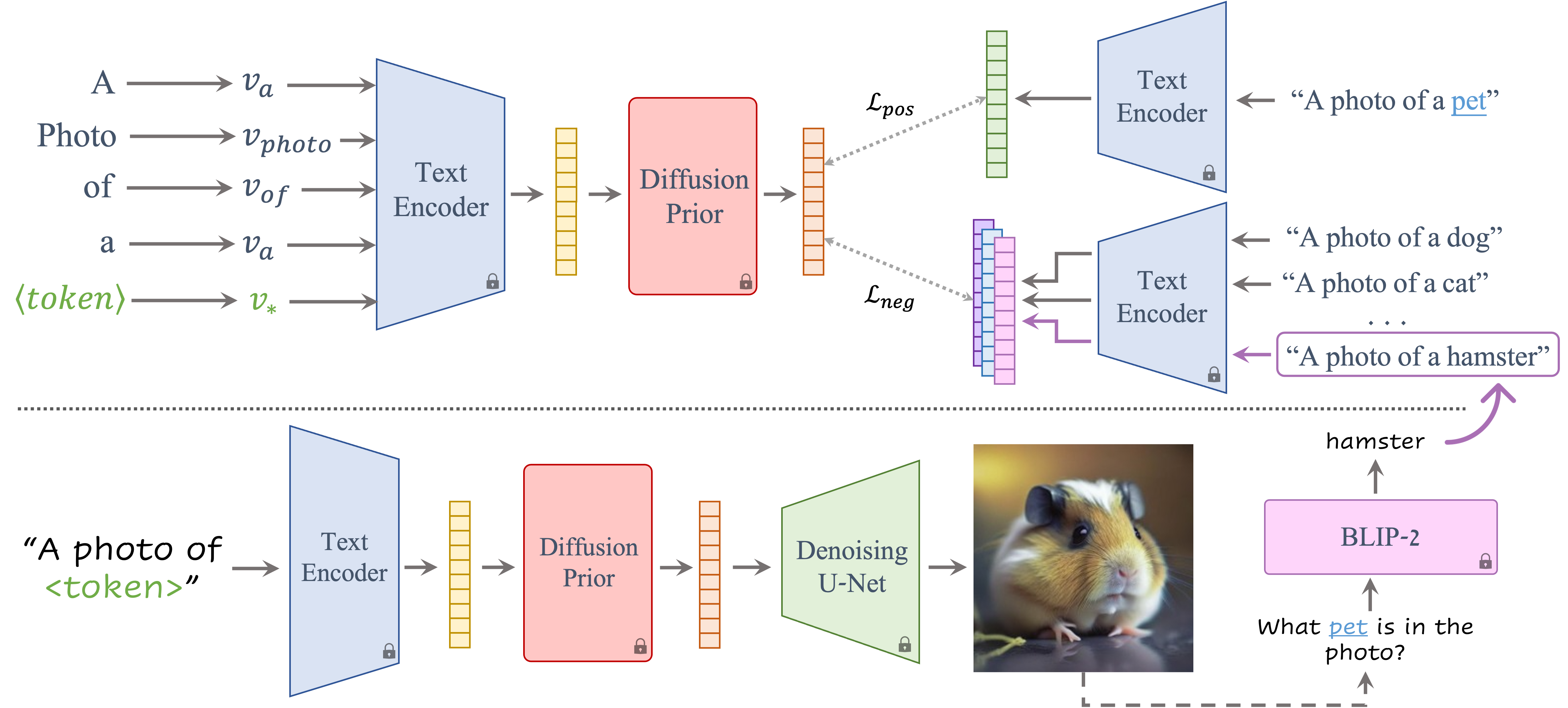

- We optimize a single embedding \(v_*\) representing the novel concept we wish to generate (e.g., a new type of "pet").

- We compute a set of constraints in the output space of a Diffusion Prior model, guiding the learned embedding \(v_*\) to be similar to the target category while being different from existing members (e.g., "dog", "cat").

- We use VLM guidance from a pretrained BLIP-2 model to gradually expand the set of negative classes, resulting in more creative generations.
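The constraints above can be sketched as a loss on the prior's output. The following is a minimal NumPy sketch under stated assumptions, not ConceptLab's implementation: the diffusion prior is replaced by a hypothetical fixed linear map `W`, the category and member embeddings are random vectors, and the loss is assumed to be one positive cosine term (weighted by a hypothetical `lam`) plus an average of negative cosine terms. The helper names `cosine`, `cosine_grad`, and `prior_constraint_loss` are illustrative.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def cosine_grad(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Gradient of cosine(a, b) with respect to a."""
    na, nb = np.linalg.norm(a), np.linalg.norm(b)
    return b / (na * nb) - cosine(a, b) * a / na**2


def prior_constraint_loss(v, W, pos, negs, lam=1.0):
    """Return (loss, gradient w.r.t. v) for the assumed prior constraints.

    W:    hypothetical linear stand-in for the diffusion prior
    pos:  embedding of the broad category, e.g. "a photo of a pet"
    negs: embeddings of existing members, e.g. "dog", "cat"
    lam:  hypothetical weight on the positive term
    """
    out = W @ v  # stand-in "prior output" for the learned embedding v_*
    loss = lam * (1.0 - cosine(out, pos))  # stay close to the category
    grad_out = -lam * cosine_grad(out, pos)
    for n in negs:  # stay away from each existing member (averaged)
        loss += cosine(out, n) / len(negs)
        grad_out += cosine_grad(out, n) / len(negs)
    return loss, W.T @ grad_out  # chain rule through the linear map


# Toy demo: random vectors in place of real text/image embeddings.
rng = np.random.default_rng(0)
dim = 16
W = rng.normal(size=(dim, dim)) / np.sqrt(dim)
pos = rng.normal(size=dim)
negs = [pos + 0.5 * rng.normal(size=dim) for _ in range(2)]  # members resemble the category
v = rng.normal(size=dim)

start_loss, _ = prior_constraint_loss(v, W, pos, negs)
for _ in range(500):
    _, grad = prior_constraint_loss(v, W, pos, negs)
    v -= 0.1 * grad  # plain gradient descent on v_*
final_loss, _ = prior_constraint_loss(v, W, pos, negs)
print(f"loss: {start_loss:.3f} -> {final_loss:.3f}")
```

The hand-written gradient only keeps the sketch dependency-free; a real setup would backpropagate through the actual prior with an autodiff framework.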

What Can it Do?

Creative Generation

Using our Adaptive Negatives scheme, users can generate unique, never-before-seen concepts. With ConceptLab, you can create new superheroes, design new buildings, dream up a new pet, and even compose brand-new art styles.
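The Adaptive Negatives idea (periodically asking a VLM what the current concept looks like and adding that answer as a new negative) can be sketched as a loop. This is a hypothetical interface, not ConceptLab's code: `optimize_step`, `render`, and `ask_vlm` are assumed callables, `query_every` is an assumed schedule, and the question phrasing in the comment is only illustrative.

```python
from typing import Callable, List


def adaptive_negatives(
    optimize_step: Callable[[List[str]], None],
    render: Callable[[], object],
    ask_vlm: Callable[[object], str],
    initial_negatives: List[str],
    steps: int,
    query_every: int,
) -> List[str]:
    """Grow the negative set during optimization (hypothetical interface).

    optimize_step: one update of v_* under the current negatives
    render:        generate an image from the current v_*
    ask_vlm:       e.g. a BLIP-2 model asked "What kind of pet is in this photo?"
    """
    negatives = list(initial_negatives)
    for step in range(1, steps + 1):
        optimize_step(negatives)
        if step % query_every == 0:
            answer = ask_vlm(render()).strip().lower()
            # The VLM names the existing member the concept currently resembles;
            # add it as a new negative constraint if it is not already one.
            if answer and answer not in negatives:
                negatives.append(answer)
    return negatives


# Usage with stand-in callables (no real model involved).
calls = []
answers = iter(["Dog", "cat", "dog ", "hamster"])
result = adaptive_negatives(
    optimize_step=lambda negs: calls.append(len(negs)),
    render=lambda: None,
    ask_vlm=lambda image: next(answers),
    initial_negatives=["cat"],
    steps=8,
    query_every=2,
)
print(result)  # ['cat', 'dog', 'hamster']
```

Normalizing answers before the membership check keeps near-duplicate replies (e.g., "Dog" and "dog ") from adding redundant constraints.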

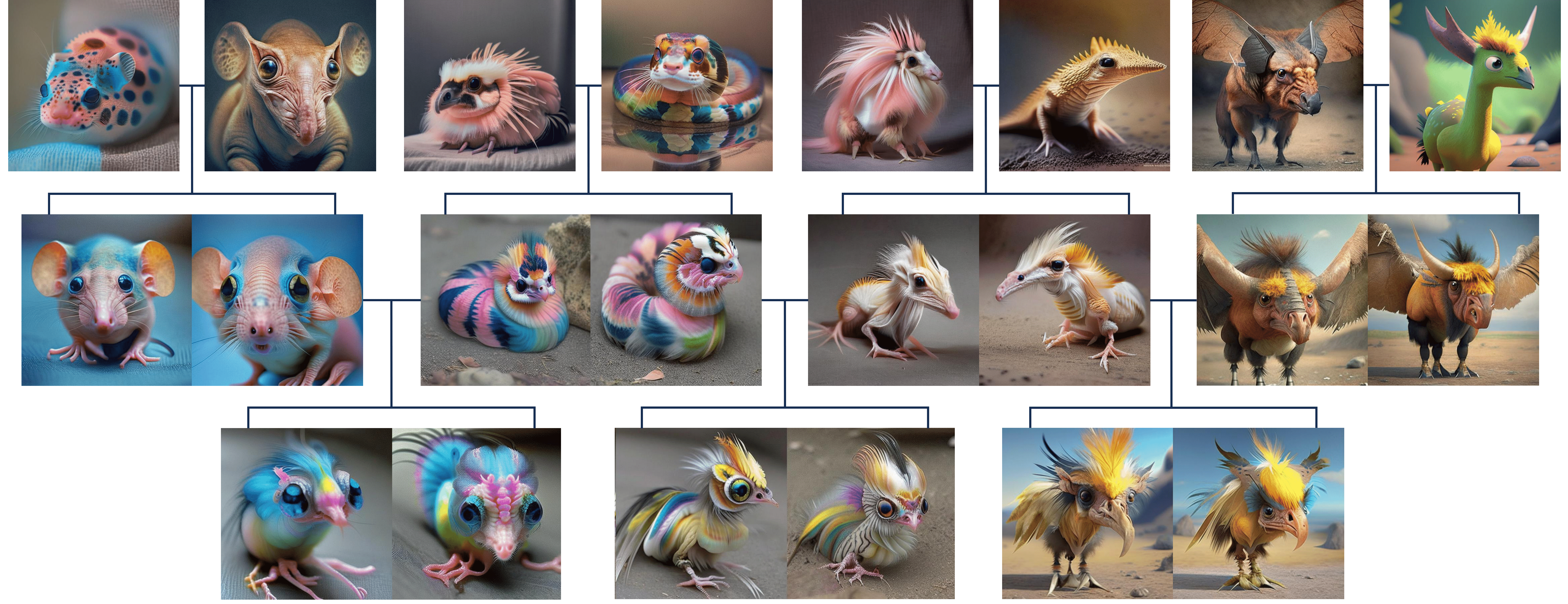

Evolutionary Generation

ConceptLab can mix generated concepts to iteratively learn new, unique creations. This process can be repeated to create further "generations", each a hybrid of the previous two.
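One reading of "each a hybrid of the previous two" is a lineage where every new concept mixes the two most recent ones. The sketch below shows only that bookkeeping, under stated assumptions: `evolve` is a hypothetical helper, and `average_mix` (a normalized mean of two embeddings) is a toy stand-in for ConceptLab's mixing, which instead optimizes a new embedding using the parents as positive prior constraints.

```python
from typing import Callable, List

import numpy as np


def evolve(first, second, mix: Callable, num_new: int) -> List:
    """Build a lineage where each new concept is a hybrid of the previous two."""
    lineage = [first, second]
    for _ in range(num_new):
        lineage.append(mix(lineage[-2], lineage[-1]))
    return lineage


def average_mix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Toy stand-in for mixing: normalized mean of two embeddings."""
    m = (a + b) / 2
    return m / np.linalg.norm(m)


lineage = evolve(np.array([1.0, 0.0]), np.array([0.0, 1.0]), average_mix, num_new=3)
for i, concept in enumerate(lineage):
    print(i, np.round(concept, 3))
```

Passing `mix` as a parameter keeps the lineage logic independent of how a single hybrid is actually learned.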

Concept Mixing

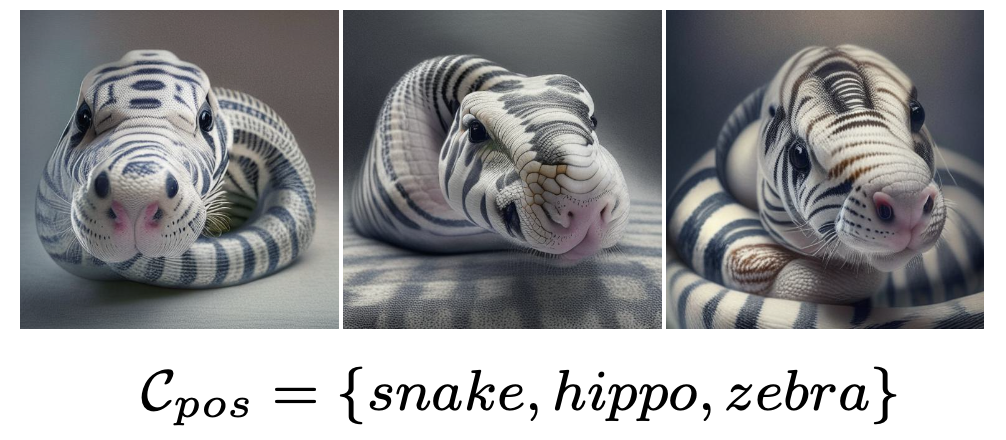

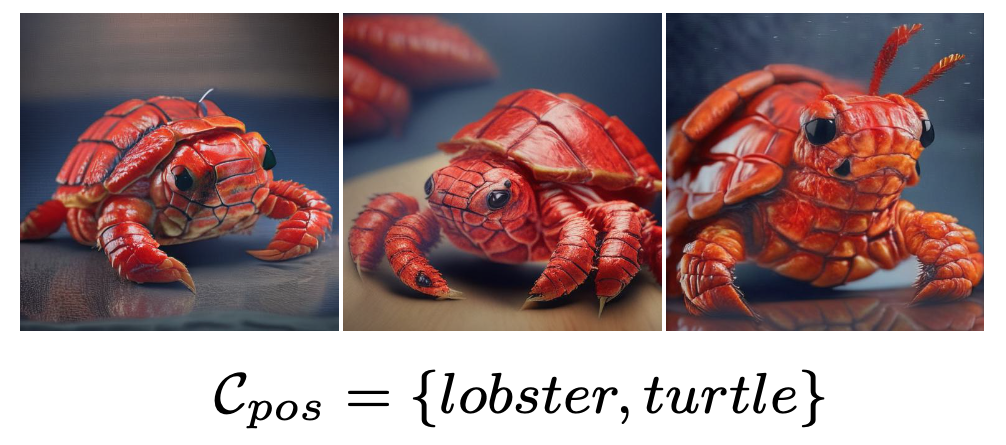

With ConceptLab, we can also form hybrid concepts that merge traits of multiple real concepts. This is done by defining multiple positive concepts, yielding creations such as a lobs-turtle, a pine-melon, and more!
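Defining multiple positive concepts can be sketched as averaging one positive cosine term per concept. This is a minimal sketch, assuming the learned embedding is compared directly to hypothetical 3-D stand-ins for "lobster" and "turtle" (no prior model, no negatives); `multi_positive_loss` is an illustrative name, not ConceptLab's API.

```python
import numpy as np


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def cosine_grad(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Gradient of cosine(a, b) with respect to a."""
    na, nb = np.linalg.norm(a), np.linalg.norm(b)
    return b / (na * nb) - cosine(a, b) * a / na**2


def multi_positive_loss(v, positives):
    """Mean of (1 - cos) over all positive concepts, with its gradient."""
    loss = sum(1.0 - cosine(v, p) for p in positives) / len(positives)
    grad = -sum(cosine_grad(v, p) for p in positives) / len(positives)
    return loss, grad


lobster = np.array([1.0, 0.0, 0.0])  # hypothetical stand-in embeddings
turtle = np.array([0.0, 1.0, 0.0])
v = np.array([0.2, 0.1, 1.0])  # starts close to neither positive

start_loss, _ = multi_positive_loss(v, [lobster, turtle])
for _ in range(500):
    _, grad = multi_positive_loss(v, [lobster, turtle])
    v = v - 0.1 * grad
final_loss, _ = multi_positive_loss(v, [lobster, turtle])
print(round(cosine(v, lobster), 3), round(cosine(v, turtle), 3))
```

With two orthogonal positives, the optimum of this averaged objective lies halfway between them, so the result is equally similar to both parents rather than collapsing onto either one.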